Message Lifetimes and Faulty Clocks

In this post, we'll explore the scenario of expiring messages and the problems associated with accurate timekeeping across multiple computers. Next, we'll demonstrate a few possible failure cases caused by clock drift, and present several options for working around these problems.

Sample Use Case

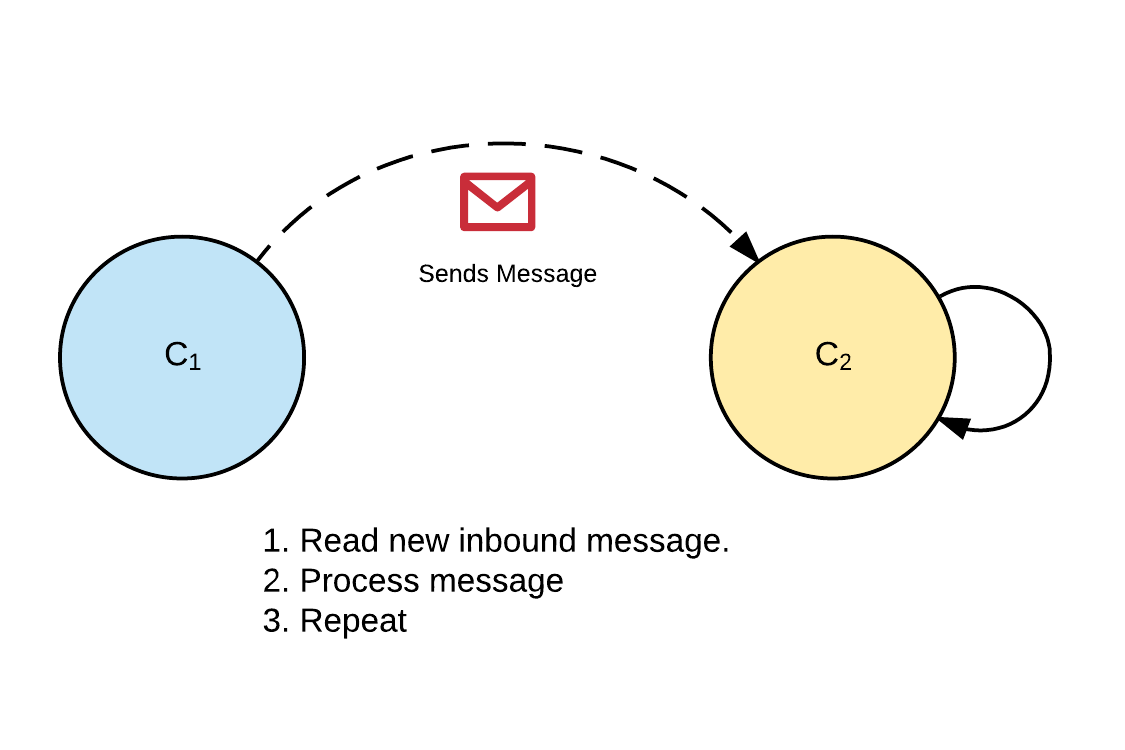

To begin, let's assume two computers (labeled C1 and C2 in the diagram) connected over a network. C1 sends a message to C2 asynchronously (without waiting for a response), with one simple requirement: if C2 cannot process the message before it is 5 minutes old, it should ignore it.

If we consider what such a message may look like, we can imagine a set of fields similar to the following:

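```csharp
using System;

// The sender stamps the message with its own clock before sending it.
public class ExampleMessage
{
    public DateTime TimeSentUtc { get; set; }

    public byte[] Data { get; set; } = Array.Empty<byte>();
}
```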

Here, we can see the message contains an opaque piece of data (the byte[] property) and a timestamp indicating when the message was sent. We can also imagine the receiver logic that processes this message, based on our business requirement not to process a message that is older than 5 minutes:

```csharp
// ...

// Compare the receiver's clock with the timestamp taken on the sender's clock.
if ((DateTime.UtcNow - nextMsg.TimeSentUtc) > TimeSpan.FromMinutes(5))
{
    // Ignore message - it's expired
}
else
{
    // Read + process message
}

// ...
```

Seems fairly straightforward, but if we take a step back we can see that this simple design has some troublesome built-in requirements. First, the code assumes that both machines have nearly perfect time synchronization, since it compares a timestamp taken on the sender with the current time on the receiver. Second, it assumes that time only moves forward, because it expects nextMsg.TimeSentUtc to always be earlier than DateTime.UtcNow. While these may seem reasonable, let's see how these assumptions can break down.

A Question of Time

Accurate clocks are critical to determining the order of operations and establishing the proper relationships between events. Log file entries, database records, and emails are all marked with (and ordered by) timestamps, so ensuring reasonable accuracy is fundamental to the behavior of many applications.

While it is true that every operating system and/or framework gives you some ability to know what time it is, that reference is only as good as the underlying hardware clock (either physical or virtual) and the time source used to synchronize it. Some examples of the challenges with keeping time are:

- Virtualization can cause guest OS clocks to slow down or speed up depending on load.

- Modern datacenters run much warmer than you might expect, and temperature affects the oscillation rate of the physical quartz crystals.

- NTP allows time adjustments that can actually jump backwards, causing repeated timestamps.

- Leap-second handling can either "smear" time or cause moments in time to repeat (i.e., duplicate timestamps).

- Many cloud environments (such as Azure and AWS) do not provide SLAs on clock synchronization between machines.

- On Windows, SNTP typically can't provide accuracy better than 1 or 2 seconds (and is often worse). Note that Windows Server 2016 made improvements in this area, provided the network is appropriately symmetric.

- Client or edge devices (such as smartphones, tablets, Arduino, Raspberry Pi, etc.) are notorious for synchronizing infrequently (or never), leading to much higher levels of drift.

- Network perimeter hardening can inadvertently block NTP traffic, making synchronization impossible.

All of these factors can produce strange effects in programs that make the unfortunate assumption that time flows linearly forward. For example, Cloudflare was impacted by a leap second adjustment that caused certain types of DNS resolution to fail. The Orleans team identified several runtime issues caused by clock drift that affected correct functionality. Google's Leap Smear efforts (while effective) highlight that clock rates can change frequently, both speeding up and slowing down over periods of time. The lesson is simple: relying on perfectly synchronized clocks across multiple computers will end in pain and despair.
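Most of these adjustments are invisible to application code. One rough way to notice a stepped clock is to compare how far the wall clock advanced with how much time a monotonic timer measured over the same interval. The sketch below assumes .NET's `Stopwatch`, which on mainstream platforms is backed by a monotonic counter that does not move when the system clock is set forward or backward. The `ClockDiscrepancy` helper and the one-second tolerance are hypothetical choices for illustration, not part of any library.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper: how far the wall clock moved compared with a monotonic
// timer over the same interval. Near zero means they agree; a large positive
// or negative result suggests the system clock was stepped (for example by NTP
// or a manual change) while we were measuring.
static TimeSpan ClockDiscrepancy(DateTime wallStartUtc, DateTime wallEndUtc, TimeSpan monotonicElapsed)
    => (wallEndUtc - wallStartUtc) - monotonicElapsed;

// Sample both clocks, wait, then sample the wall clock again.
var wallStart = DateTime.UtcNow;
var stopwatch = Stopwatch.StartNew();

Thread.Sleep(TimeSpan.FromMilliseconds(500));

var discrepancy = ClockDiscrepancy(wallStart, DateTime.UtcNow, stopwatch.Elapsed);
var tolerance = TimeSpan.FromSeconds(1); // illustrative threshold, not a recommendation

// TimeSpan.Duration() returns the absolute value, so jumps in either direction count.
Console.WriteLine(discrepancy.Duration() > tolerance
    ? $"Wall clock and monotonic timer disagree by {discrepancy.TotalMilliseconds} ms"
    : "Wall clock and monotonic timer agree within tolerance");
```

A long-running service could repeat this check periodically and log large discrepancies; it only hints that the clock moved, and cannot say which clock (local or remote) is "right".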

Failure Cases

There are two failure cases we need to consider in this example: client time shifting, and server time shifting. If the client time shifts backwards, this will make messages appear "older" than they actually are, causing them to be dropped. The same condition happens if the server time drifts forward. If we flip these cases around, we see messages may actually live longer than expected if the client time drifts forward (or server drifts backward).
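The four cases can be worked through with a little arithmetic. If the sender's clock is off by `senderOffset` and the receiver's by `receiverOffset` (positive meaning ahead of true time), the age the receiver computes is the true age plus `receiverOffset - senderOffset`. The sketch below encodes that relationship; `ApparentAge` and the 4-minute offsets are illustrative assumptions, not measurements.

```csharp
using System;

// Age as computed by the receiver: receiverNow - senderTimestamp.
// senderTimestamp = trueSendTime + senderOffset
// receiverNow     = trueNow + receiverOffset
// => apparent age = actualAge + (receiverOffset - senderOffset)
static TimeSpan ApparentAge(TimeSpan actualAge, TimeSpan senderOffset, TimeSpan receiverOffset)
    => actualAge + (receiverOffset - senderOffset);

var ttl = TimeSpan.FromMinutes(5);
var trueAge = TimeSpan.FromMinutes(2); // the message really is 2 minutes old

var cases = new (string Label, TimeSpan Sender, TimeSpan Receiver)[]
{
    ("clocks in sync",      TimeSpan.Zero,            TimeSpan.Zero),
    ("client 4 min behind", TimeSpan.FromMinutes(-4), TimeSpan.Zero),
    ("server 4 min ahead",  TimeSpan.Zero,            TimeSpan.FromMinutes(4)),
    ("client 4 min ahead",  TimeSpan.FromMinutes(4),  TimeSpan.Zero),
};

foreach (var (label, sender, receiver) in cases)
{
    var age = ApparentAge(trueAge, sender, receiver);
    Console.WriteLine($"{label,-20} apparent age {age,9}  expired: {age > ttl}");
}
```

With a true age of two minutes, a client running four minutes behind (or a server running four minutes ahead) yields an apparent age of six minutes, so a perfectly valid message is dropped. A client running four minutes ahead yields an apparent age of minus two minutes, so the message will live well past its intended lifetime.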

To visualize these issues, let's use a fairly simple test program (C# example shown below). The program records a message timestamp and then, once every second, measures how "old" that timestamp is according to the system clock; if the age exceeds the example threshold, the program prints 'EXPIRED':

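```csharp
using System;
using System.Threading;

class Program
{
    static void Main(string[] args)
    {
        var messageTimeStamp = DateTime.UtcNow;
        var expireAfter = TimeSpan.FromMinutes(5);

        // Every second, compare the current wall-clock time with the original timestamp.
        // The 'using' declaration keeps the timer referenced (so it is not garbage
        // collected) until Main returns.
        using var timer = new Timer(_ =>
        {
            var currentAge = DateTime.UtcNow - messageTimeStamp;
            var status = currentAge > expireAfter ? " - EXPIRED!!!" : "";
            Console.WriteLine($"Message is now {currentAge.TotalMilliseconds} ms old{status}");
        });

        timer.Change(TimeSpan.FromSeconds(1), TimeSpan.FromSeconds(1));

        Console.ReadLine(); // press Enter to stop
    }
}
```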

While this program is running, we'll adjust the system clock to demonstrate the issues that can occur. We're going to amplify the effects by moving the clock by hours at a time, but note that the same issues also occur on much smaller timescales (minutes, seconds, or even milliseconds). With these parameters in mind, here is an example trace while the system clock is being adjusted:

```text
### NOTE: Default system clock (this is normal)
Message is now 1001.045 ms old
Message is now 2001.693 ms old
Message is now 3002.3793 ms old
Message is now 4002.5631 ms old
Message is now 5003.4379 ms old
Message is now 6003.8685 ms old
Message is now 7004.3192 ms old
Message is now 8004.502 ms old
### NOTE: Clock moved ahead a few hours
Message is now 10804538.4192 ms old - EXPIRED!!!
Message is now 10805539.4194 ms old - EXPIRED!!!
Message is now 10806540.4248 ms old - EXPIRED!!!
Message is now 10807541.4255 ms old - EXPIRED!!!
Message is now 10808541.8356 ms old - EXPIRED!!!
Message is now 10809541.9583 ms old - EXPIRED!!!
Message is now 10810542.5662 ms old - EXPIRED!!!
Message is now 10811542.8391 ms old - EXPIRED!!!
Message is now 10812543.2585 ms old - EXPIRED!!!
Message is now 10813544.2933 ms old - EXPIRED!!!
Message is now 10814544.527 ms old - EXPIRED!!!
Message is now 10815545.1764 ms old - EXPIRED!!!
Message is now 10816545.7657 ms old - EXPIRED!!!
### NOTE: Clock moved back several hours
Message is now -43188641.6532 ms old
Message is now -43187641.1251 ms old
Message is now -43186640.9049 ms old
Message is now -43185640.6506 ms old
Message is now -43184640.4691 ms old
Message is now -43183639.6284 ms old
Message is now -43182639.2505 ms old
```

Obviously, the question "How old is this?" becomes much harder to answer once we no longer have stable clocks. Notice that it is possible to end up with "negative" ages, which can easily cause havoc downstream (similar to the Cloudflare issue mentioned above); a negative age never exceeds the TTL, so such a message will not expire until the clock catches up. Alternatively, we can end up discarding messages that appear to be expired (but clearly shouldn't be).
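One defensive measure is to stop treating a negative age as "very fresh" and flag it explicitly. The sketch below assumes a hypothetical `Classify` helper and a small skew tolerance (2 seconds here, purely illustrative); what to do with a flagged message (log it, process it anyway, or reject it) is a business decision the sketch leaves open.

```csharp
using System;

// Hypothetical policy: classify a message by its apparent age instead of
// assuming the age is always non-negative. The tolerance absorbs small,
// expected skew; anything more negative than that is flagged rather than
// silently accepted.
static string Classify(DateTime sentUtc, DateTime nowUtc, TimeSpan timeToLive, TimeSpan skewTolerance)
{
    var age = nowUtc - sentUtc;
    if (age < -skewTolerance) return "ClockAnomaly"; // stamped "in the future": sender ahead or receiver behind
    if (age > timeToLive) return "Expired";
    return "Fresh";
}

var now = DateTime.UtcNow;
var ttl = TimeSpan.FromMinutes(5);
var tolerance = TimeSpan.FromSeconds(2); // illustrative value

Console.WriteLine(Classify(now.AddMinutes(-1), now, ttl, tolerance));  // Fresh
Console.WriteLine(Classify(now.AddMinutes(-10), now, ttl, tolerance)); // Expired
Console.WriteLine(Classify(now.AddHours(12), now, ttl, tolerance));    // ClockAnomaly
```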

Possible Solutions

The first option for addressing drift between a sender and receiver is to stop relying on timestamps from the sender altogether. In this model, the client sends only the TTL duration, and the receiver stamps the message on arrival. The receiver can then compare that arrival time with its own current time and expire the message once the difference exceeds the TTL. This also allows us to move the "IsExpired" check into the message itself, as shown below:

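```csharp
using System;

public class ExampleMessage
{
    // Supplied by the sender: how long the message may live.
    public TimeSpan TtlDuration { get; set; }

    // Stamped by the receiver, using the receiver's own clock, on arrival.
    public DateTime MessageReceivedUtc { get; set; }

    public byte[] Data { get; set; } = Array.Empty<byte>();

    // Both times now come from the same machine's clock.
    public bool IsExpired => (DateTime.UtcNow - MessageReceivedUtc) > TtlDuration;
}
```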

This is a simplification of the solution the Orleans team chose to address their issues. Note, however, that clock drift on the receiver during processing can still yield incorrect results; the difference is that we are no longer relying on synchronization between machines.
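A possible variation on the receiver-stamped approach above swaps `DateTime.UtcNow` for a monotonic timer, so that stepping the receiver's system clock while a message waits does not change its computed age. This sketch assumes .NET's `Stopwatch.GetTimestamp()`; the helper names `StampArrival`, `AgeSince`, and `IsExpired` are hypothetical. Stopwatch timestamps are only meaningful inside a single process on a single machine, so they cannot be persisted or sent to another computer, and they would not survive a receiver restart.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Record arrival using the monotonic Stopwatch counter instead of the wall clock.
static long StampArrival() => Stopwatch.GetTimestamp();

static TimeSpan AgeSince(long arrivalTimestamp)
{
    long ticks = Stopwatch.GetTimestamp() - arrivalTimestamp;
    // Stopwatch ticks run at Stopwatch.Frequency ticks per second.
    return TimeSpan.FromSeconds((double)ticks / Stopwatch.Frequency);
}

// Only the TTL duration comes from the sender; the age is measured locally.
static bool IsExpired(long arrivalTimestamp, TimeSpan ttlDuration)
    => AgeSince(arrivalTimestamp) > ttlDuration;

// Usage: a message arrives carrying a 500 ms TTL supplied by the client.
var ttlFromSender = TimeSpan.FromMilliseconds(500);
var arrivedAt = StampArrival();

Console.WriteLine($"Expired on arrival? {IsExpired(arrivedAt, ttlFromSender)}");
Thread.Sleep(TimeSpan.FromMilliseconds(600));
Console.WriteLine($"Expired after waiting? {IsExpired(arrivedAt, ttlFromSender)}");
```

The first check reports the message as not expired; the second, after waiting longer than the TTL, reports it as expired, regardless of any change to the system clock in between.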

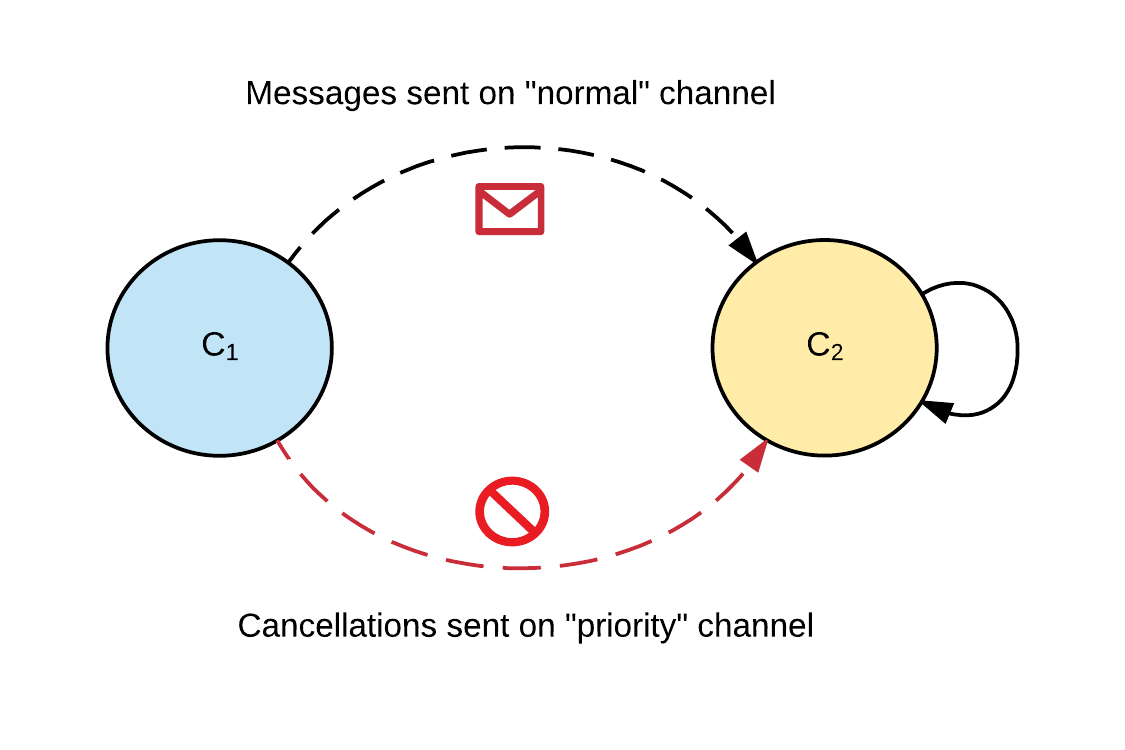

Another approach is to make the sender an active participant in expiring / removing work that it deems expired. This is built on the notion that clients are also part of any distributed system. Here, we would implement a second message channel (essentially a priority queue) for senders to notify receivers that an previously sent message should not be processed, as shown below:

This implementation relies on the client remaining active during the processing period, so it won't be suitable for scenarios where clients may disconnect (or be killed) after sending messages. However, it does allow the client to cancel messages for other business reasons (beyond TTL expiration).
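The two-channel design described above can be sketched in memory as follows. This is a minimal illustration under several assumptions: each message carries a hypothetical `Guid` identifier (the `ExampleMessage` class above has none), a single consumer thread calls `TryProcessNext`, and in-process queues stand in for real network channels. Cancellations are drained first so that they always "jump the line" ahead of regular work.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Regular work, plus a higher-priority channel of cancellation notices from senders.
var workQueue = new ConcurrentQueue<(Guid Id, string Payload)>();
var cancelQueue = new ConcurrentQueue<Guid>();
var cancelled = new HashSet<Guid>(); // touched only by the single consumer

bool TryProcessNext(out string? processedPayload)
{
    // Apply every pending cancellation before looking at regular work.
    while (cancelQueue.TryDequeue(out var cancelledId))
        cancelled.Add(cancelledId);

    while (workQueue.TryDequeue(out var msg))
    {
        if (cancelled.Remove(msg.Id))
            continue; // the sender withdrew this message; skip it

        processedPayload = msg.Payload;
        return true;
    }

    // Note: cancellations for messages that were already processed stay in
    // the set; a real implementation would need to expire them eventually.
    processedPayload = null;
    return false;
}

// Usage: two messages are sent, then the sender cancels the first one.
var first = Guid.NewGuid();
var second = Guid.NewGuid();
workQueue.Enqueue((first, "order #1"));
workQueue.Enqueue((second, "order #2"));
cancelQueue.Enqueue(first);

while (TryProcessNext(out var payload))
    Console.WriteLine($"Processing {payload}"); // prints only "Processing order #2"
```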

Finally, you can use a brokered messaging platform, such as Azure Service Bus. This doesn't completely solve the problem, but in some cases it can offload the handling of message lifetimes to the broker, removing complexity from your application. Note, however, that for brokers that support partitioned receivers there may still be drift between individual receivers, as there are no guarantees of time synchronization between the independent resources handling the partitions.

Conclusions

As we've shown above, time synchronization is fundamental to many implicit behaviors we have come to rely on. This is especially important when we start exchanging messages with other computers, including those we don't directly control (such as smartphones, tablets, and IoT edge devices). When designing an application messaging strategy, be sure to consider the impacts of clock drift, and make sure your application can handle the various oddities that can result.